Free Download CCNA Dumps

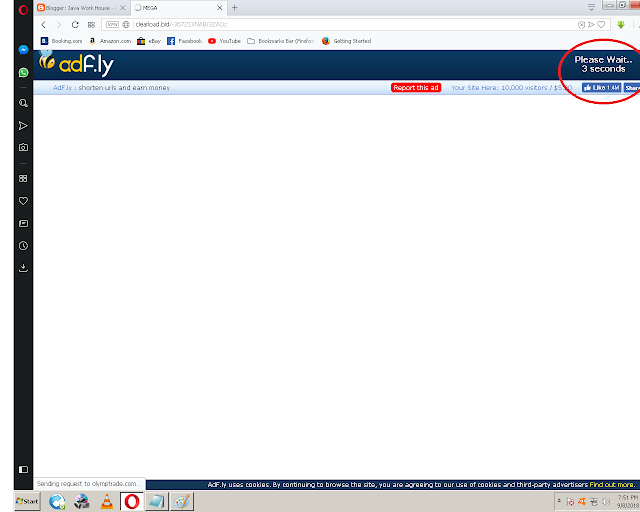

Free download of CCNA dumps, security handouts, lab practice, a Python guide, important questions and answers, and more, all in one place.

Download method:

1. Click the link for the book you need.
2. Wait 5 seconds for the ad preview. Note: you must turn off your ad blocker if you are using one.
3. Click the "Skip Ad" button to go to the real link.
4. Wait for the mega page to load.
5. Click the "Download" button if you are on a computer, or the "Open in browser" button on mobile.

- VLSM Workbook.pdf (74 KB): http://zipansion.com/3ZABF
- IP Addressing & Subnetting Workbook.pdf (190 KB): http://zipansion.com/3ZACa
- Access Lists Workbook.pdf (213 KB): http://zipansion.com/3ZAD5
- gratisexam.com-Cisco.Prep4sure.100-105.v2016-07-07.by.Greg.35q.pdf (218 KB): http://zipansion.com/3ZADj
- 200-125-ccna-v3.pdf (246 KB): http://zipansion.com/3ZAE9
- [Mar-2018] New PassLeader 200-125 Exam Dumps.pdf (250 KB): http://zipansion.com/3ZAEm
- Tutorial de Subneteo Clase A, B, C.pd...
